How to Measure the Effectiveness of Condition Monitoring

Condition monitoring should never be limited to a single technology or method. Instead, it should combine and integrate an optimum selection of purposeful tools and tasks. Condition monitoring can be largely technology based but can also be observation or inspection based.

Terms and Definitions

- Reportable Condition — This is an abnormal condition that requires correction. A reportable condition could be either a root cause or an active failure event or fault.

- Proactive Domain — This is the period of time when there is a reportable root cause condition but no significant loss of machine life has occurred. Unless detected and corrected, the condition will advance to the predictive domain.

- Predictive Domain — This follows the proactive domain and is also known as the failure development period. The predictive domain begins at the inception of a reportable failure condition (e.g., severe misalignment) or fault and ends at the approaching end of operational service life.

- RUL — Remaining useful life is an estimate of the remaining service life of a machine when an active wear or failure condition has been detected and remediated. Machines start with an RUL of 100 percent. As they age and wear occurs, the RUL approaches zero.

- Root Cause (RC) Saves — RC saves are the percentage of reportable conditions that were detected and remediated in the proactive domain. The higher this number, the better. All RC saves leave RUL unchanged.

- Predictive Saves — This refers to reportable conditions that have advanced to the predictive domain and are detected and remediated prior to operational failure. The RUL of the machine was lowered during the time the reportable condition remained undetected and uncorrected in the predictive domain.

- X — This is a timeline point when a reportable condition (e.g., root cause of a fault) is detected and remediated. It also represents operational failure when not detected in the proactive or predictive domains.

- Misses — Misses refer to the percentage of reportable conditions that advance to an undetected operational failure. The lower this number the better.

- Overall Condition Monitoring Effectiveness (OCME) — This metric defines the overall effectiveness of condition monitoring (inspection combined with technology-based condition monitoring). It is quantified as the average percentage of remaining useful life (RUL) across all machines and reportable conditions during the reporting period. The higher this number, the more effective condition monitoring is at detecting and correcting reportable conditions early.

- Condition Monitoring Interval — This refers to the time interval between technology-based condition monitoring events (vibration, oil sampling, thermography, etc.).

- Condition Monitoring Intensity — This refers to the number of condition monitoring technologies in use and the intensity of their use. For example, an oil analysis test slate involving numerous tests with skillful data interpretation would be referred to as intense.

- Inspection Interval — This refers to the time interval between machine inspections by operators and technicians.

- Inspection Intensity — This refers to the number of inspection points and the examination skills of the inspector.

Most machines share condition monitoring and inspection needs with many other types of equipment. This is because they have components in common, such as motors, bearings, seals, lubricants and couplings. At the same time, their operating conditions and applications may demand unique inspection requirements. These influence failure modes and machine criticality.

As discussed in previous columns, inspection should be viewed with the same serious intent as other condition monitoring practices. In my opinion, a world-class inspection program should produce more “saves” than all other condition monitoring activities combined. It’s not an alternative to technology-based condition monitoring but rather a strategic and powerful companion.

The technologies of infrared thermography, analytical ferrography, vibration, motor current and acoustic emission are generally used to detect active faults and abnormal wear. Conversely, a well-conceived inspection program should largely focus on root causes and incipient (very early stage) failure conditions. Detection of advanced wear and impending failure is secondary.

Remedy to Condition Monitoring Blindness

Consider this: How could any of the mentioned condition monitoring technologies detect the sudden onset of the following?

- Defective seal and oil leakage

- Filter in bypass

- Coolant leak

- Air-entrained oil

- Oil oxidation

- Varnish

- Impaired lubricant supply (partial starvation)

- Bottom sediment and water (BS&W)

- Defective breather or vent condition

Even if the technologies could detect these reportable conditions, this ability is constrained by the condition monitoring schedule. For instance, consider a condition monitoring program that is on a monthly schedule and conducted the first day of each month. If the onset of a reportable abnormal condition occurs the following day, it goes undetected by technology-based condition monitoring until the next month (up to 30 days later).
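The schedule gap described above can be sketched with a little arithmetic. The snippet below is only an illustration: it assumes a 31-day month, assumes every scheduled check actually detects the condition (no detection blindness), and adds a hypothetical weekly inspection route for comparison. The function names are invented for this example.

```python
def days_until_next_check(onset_day: int, interval_days: int) -> int:
    """Days from onset to the next scheduled check.

    Assumes checks fall on days 0, interval, 2*interval, ... and that
    a check on the onset day itself catches the condition.
    """
    return (-onset_day) % interval_days


def detection_delay(onset_day: int, intervals: list[int]) -> int:
    """Shortest wait across all schedules (technology-based and inspection)."""
    return min(days_until_next_check(onset_day, i) for i in intervals)


# Onset on the day after the monthly survey (day 1 of an assumed 31-day month).
monthly_only = detection_delay(1, [31])

# Same onset, with a hypothetical weekly inspection route added.
with_weekly_inspection = detection_delay(1, [31, 7])

print(monthly_only, with_weekly_inspection)
```

Under these assumptions the monthly survey alone leaves the condition undetected for 30 days, while the added weekly inspection shortens the wait to 6 days.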

You could say that inspection provides the eyes and ears for everything that condition monitoring can’t detect and is a default detection scheme during the intervening days when no technology-based condition monitoring occurs. In other words, inspection fills in critical gaps where there is detection blindness of the technologies and schedule blindness for the time periods between use. Higher inspection frequency and more intense examination skills (by the inspector) significantly increase condition monitoring’s ability to detect root causes and symptoms of various states of failure.

Build a Condition Monitoring Team

Condition monitoring should be a team effort. As with most teams, each member contributes unique and needed skills to enhance the collective capabilities of the team. One member cannot, and should not, do the tasks of others. In American football, you can’t turn a linebacker into a quarterback. While the team members are different, they are all working toward a common goal.

Managing a condition monitoring program is a team-building activity. You have your “A” players and your “B” players. Some are generalists, and some are specialists. You have leaders, and you have followers. All the classical elements are there. The condition monitoring team includes people (inspectors, analysts, etc.), technologies (vibration, portable particle counters, infrared cameras, etc.) and external service providers (an oil analysis lab, for instance).

Overall Condition Monitoring Effectiveness (OCME)

Team performance requires one or more metrics aligned to well-defined goals. Some metrics are micro (e.g., vibration overalls or lubricant particle counts), while others are macro to capture overall team performance. In reliability, macro metrics might look at the cost of reliability (team spending) and the overall level of reliability achieved (by condition monitoring and other activities).

To illustrate the concept of a macro metric, I am introducing Overall Condition Monitoring Effectiveness (OCME). This somewhat theoretical metric drives home several important points. OCME is defined as the overall effectiveness of condition monitoring (inspection combined with technology-based condition monitoring) in detecting root causes and early failure symptoms.

This is quantified as the average percentage of remaining useful life (RUL) across all machines and reportable conditions during the reporting period (say, one year). The higher this number, the more effective condition monitoring is in detecting and correcting reportable conditions early. Machines that have no reportable conditions or failures are not included in this metric. A perfect OCME score is 100, meaning the RUL across all machines from the beginning of the reporting period to the end was unchanged. This can be normalized to total machine operating hours (for the entire group of machines included in the OCME metric).
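One way to write this definition down, as a sketch rather than an official formula, is the following. The symbols N (the number of reportable conditions in the reporting period) and RUL_i (the percent of remaining useful life at point X for condition i) are notation introduced here for illustration.

```latex
\mathrm{OCME} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{RUL}_i ,
\qquad 0 \le \mathrm{RUL}_i \le 100
```

A condition caught in the proactive domain contributes 100, an operational failure contributes 0, and a predictive save falls somewhere in between. If every reportable condition is a root cause save, the sum is 100N and the score is a perfect 100.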

To show how the OCME works, let’s look at three examples. Refer to the Terms and Definitions section above for the terms used. Each of the three cases considers different condition monitoring and inspection intervals (frequency) and intensity. Again, intensity refers to the skill and effectiveness of condition monitoring and inspection tasks.

Ten hypothetical reportable conditions are used in each case. These could be misalignment, unbalance, hot running bearings, high wear debris, wrong oil, lubricant starvation, water contamination, etc. Reportable conditions detected in the proactive domain are considered to have 100 percent RUL. Operational failure means zero percent RUL. Those conditions detected early in the predictive domain have a higher RUL than those approaching operational failure. The beginning point of the predictive domain is the inception of failure.

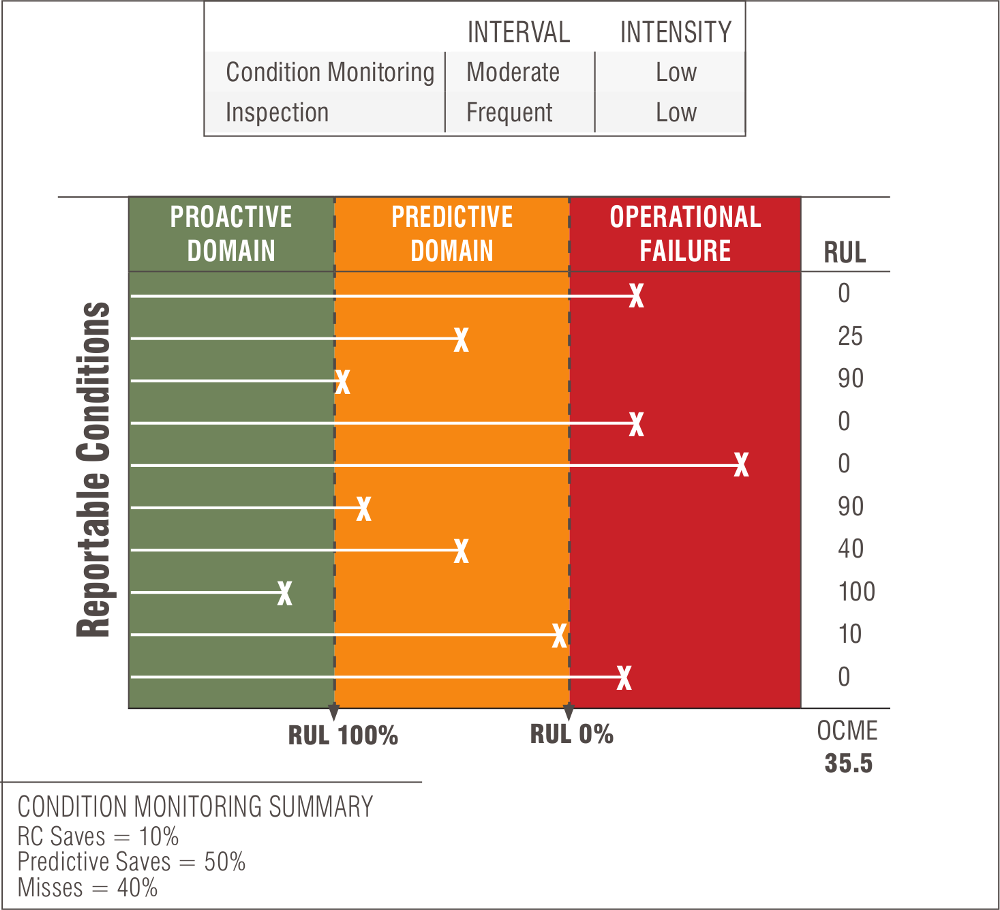

Case #1

Case #1: Common Intervals at Low Intensity

In this scenario, very few of the reportable conditions are detected in the proactive domain (at the root cause stage). Most conditions advance to the predictive domain or operational failure. The causes of this are low skill and intensity of the condition monitoring and inspection tasks. The RUL of each reportable condition is estimated, and the estimates are averaged to derive the OCME score, which is 35.5 in this case. Some 40 percent of the reportable conditions were misses, and only 10 percent were root cause saves.
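To make the tally concrete, here is a minimal Python sketch. The ten RUL values are hypothetical: they were chosen only to be consistent with the summary figures stated for Case #1 (one root cause save, four misses, an OCME of 35.5) and are not read from the figure. The `summarize` helper is likewise an illustrative name.

```python
from statistics import mean

# Hypothetical RUL (%) at point X for ten reportable conditions.
# 100 = root cause save, 0 = miss (operational failure),
# anything in between = predictive save.
case_1_rul = [100, 80, 65, 50, 40, 20, 0, 0, 0, 0]


def summarize(rul_values):
    """Return the OCME score and the save/miss percentages."""
    n = len(rul_values)
    rc_saves = sum(1 for r in rul_values if r == 100)
    misses = sum(1 for r in rul_values if r == 0)
    return {
        "ocme": mean(rul_values),
        "rc_saves_pct": 100 * rc_saves / n,
        "predictive_saves_pct": 100 * (n - rc_saves - misses) / n,
        "misses_pct": 100 * misses / n,
    }


summary = summarize(case_1_rul)
print(summary)
```

With these assumed values the score comes out to 35.5, with 10 percent root cause saves, 50 percent predictive saves and 40 percent misses. Any other set of five predictive-save values that sums to 255 would give the same score.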

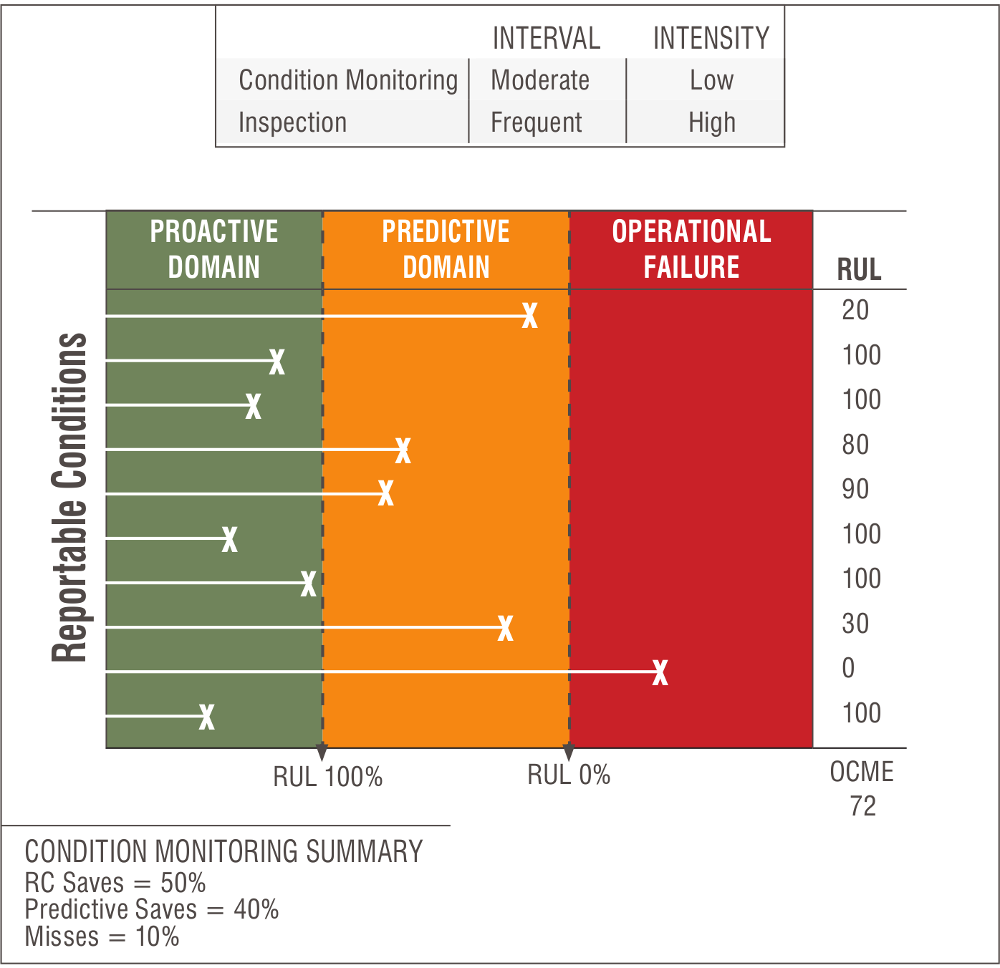

Case #2

Case #2: Common Intervals at High Inspection Intensity

This case is the same as the first with the exception of the inspection skill and competency (high intensity). This dramatically affects the OCME (score of 72). Instead of 10 percent root cause saves, we now have 50 percent and only 10 percent misses.

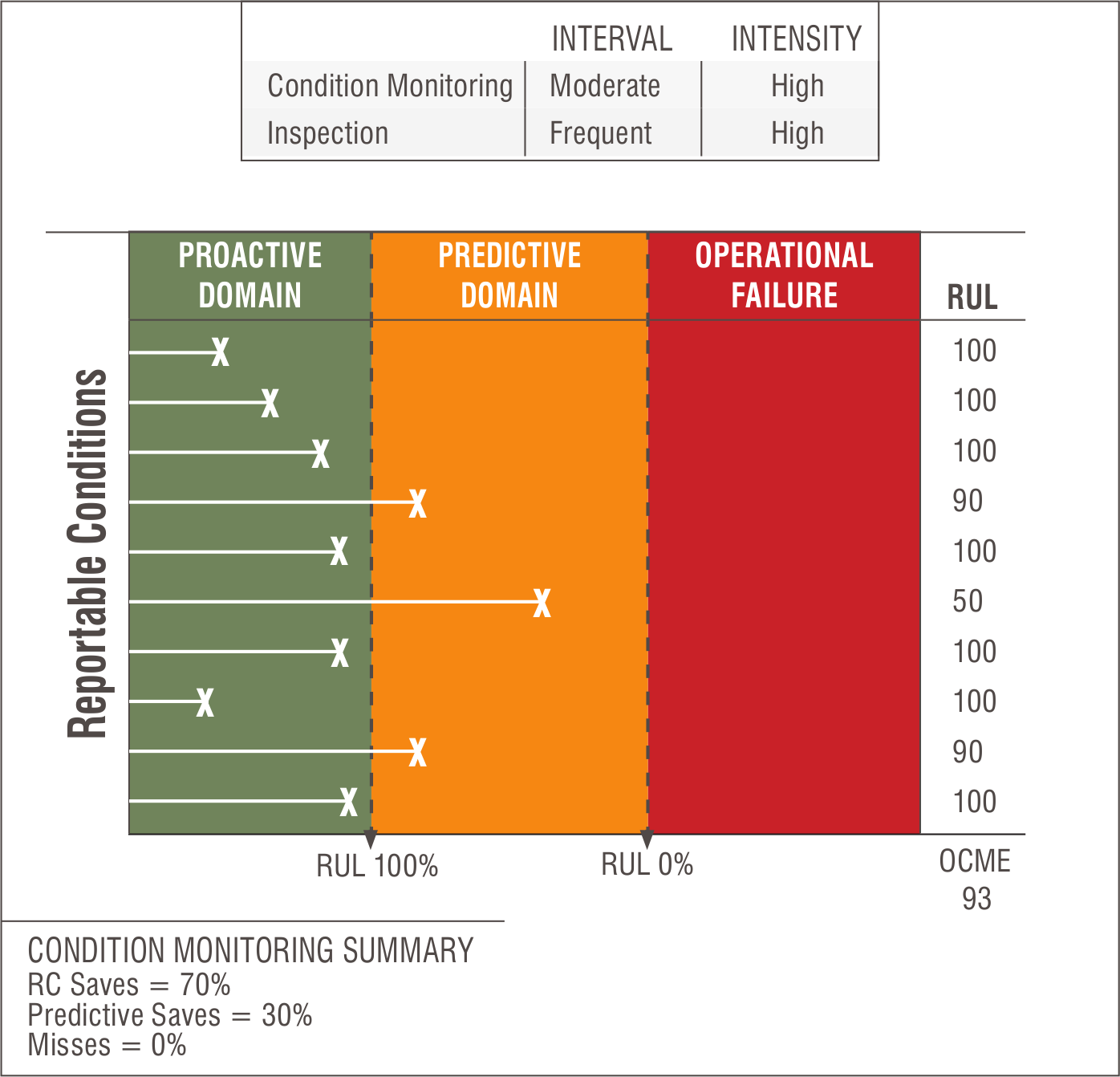

Case #3

Case #3: World-class Condition Monitoring and Inspection

This case applies high frequency and intensity for both technology-based condition monitoring and inspection tasks. At this high level of surveillance, almost all reportable conditions are detected and remediated in the proactive domain (70 percent root cause saves). All others are detected early in the predictive domain. This translates to an impressive OCME score of 93 across all machines and reportable events.
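The summary figures for the three cases also let us back out how early the predictive saves were caught. The sketch below assumes, as stated earlier, that root cause saves count as 100 percent RUL and misses as zero. The function name is hypothetical, and the result is an implied average, not a value taken from the figures.

```python
def implied_predictive_rul(ocme, rc_saves_pct, misses_pct):
    """Average RUL (%) of the predictive saves implied by the summary figures.

    Rearranges OCME = (rc% * 100 + predictive% * avg + miss% * 0) / 100
    to solve for avg.
    """
    predictive_pct = 100 - rc_saves_pct - misses_pct
    if predictive_pct <= 0:
        raise ValueError("no predictive saves in this case")
    return (ocme * 100 - rc_saves_pct * 100) / predictive_pct


# (OCME score, % root cause saves, % misses) as stated for each case.
cases = {
    "Case #1": (35.5, 10, 40),
    "Case #2": (72, 50, 10),
    "Case #3": (93, 70, 0),
}

for name, (ocme, rc, miss) in cases.items():
    print(name, round(implied_predictive_rul(ocme, rc, miss), 1))
```

Under that assumption, the predictive saves average roughly 51 percent RUL in Case #1, 55 percent in Case #2 and about 77 percent in Case #3. That fits the description of Case #3, where the remaining conditions are caught early in the predictive domain.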

Optimization

It would be negligent to conclude this column without a short reminder about optimization. There is a cost to condition monitoring, as we all know. This cost is influenced by frequency and intensity. The optimum reference state (ORS) for condition monitoring and inspection must be established. Our objective is to optimize the OCME in the context of machine criticality and failure mode ranking. I’ve addressed this subject extensively in past columns. Please refer to my Machinery Lubrication articles on the ORS, overall machine criticality (OMC) and failure modes and effects analysis (FMEA).

As a final note, my reference to intensity should not be glossed over. It is a driving factor in boosting the OCME score. Achieving condition monitoring and inspection intensity has as much to do with culture as it does with the available budget or access to technology. Training and management support define the maintenance culture. These soft, human factors require a high level of attention to achieve excellence in lubrication, reliability and asset management.